AB: AI Ethics and Regulation: How Investors Can Navigate the Maze

From potentially brand-damaging ethical risks to regulatory uncertainty, AI poses challenges for investors. But there is a path forward.

Published 06-19-24

Submitted by AllianceBernstein

By Saskia Kort-Chick | Director of Social Research and Engagement—Responsibility and Jonathan Berkow | Director of Data Science—Equities

Artificial intelligence (AI) poses many ethical issues that can translate into risks for consumers, companies and investors. And AI regulation, which is developing unevenly across multiple jurisdictions, adds to the uncertainty. The key for investors, in our view, is to focus on transparency and explainability.

The ethical issues and risks of AI begin with the developers who create the technology. From there, they flow to the developers’ clients—companies that integrate AI into their businesses—and on to consumers and society more broadly. Through their holdings in AI developers and companies that use AI, investors are exposed to both ends of the risk chain.

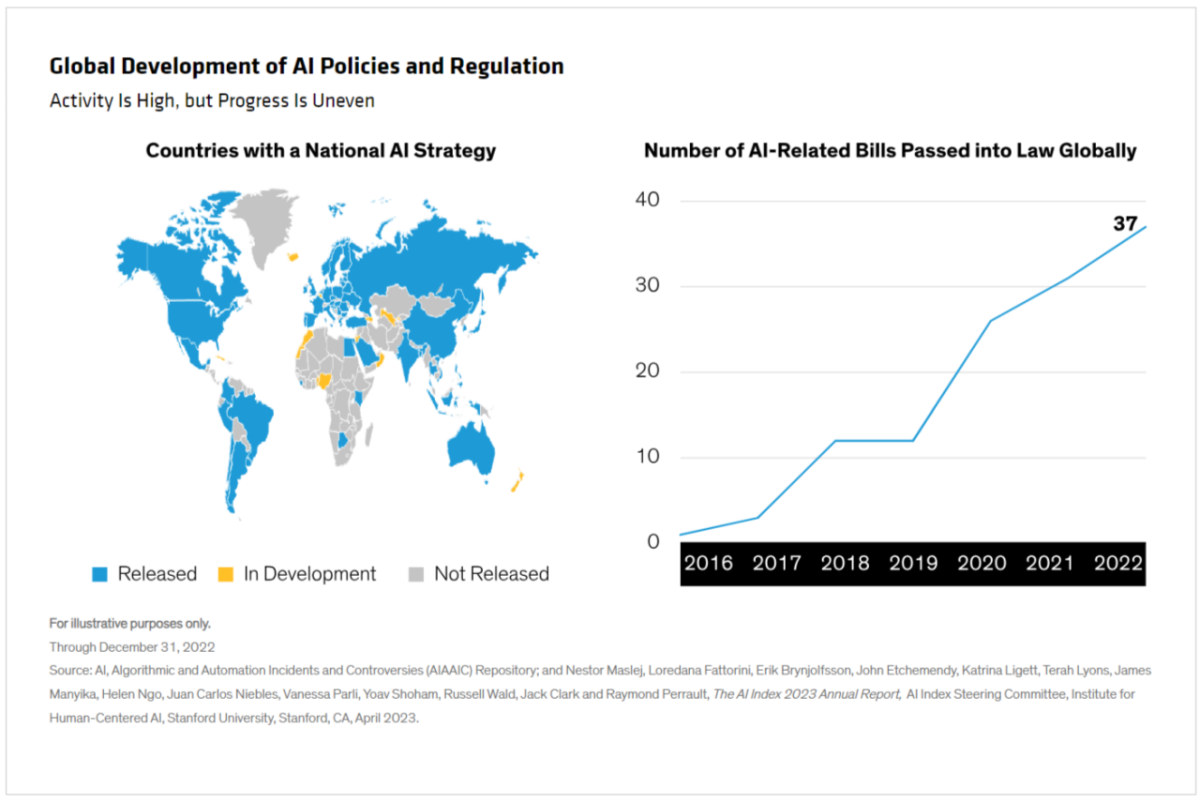

AI is developing quickly, far ahead of most people’s understanding of it. Among those trying to catch up are global regulators and lawmakers. At first glance, their activity in the AI area has grown quickly in the last few years; many countries have released related strategies and others are close to introducing them (Display).

In reality, the progress has been uneven and is far from complete. There is no uniform approach to AI regulation across jurisdictions, and some countries introduced their regulations before ChatGPT launched in late 2022. As AI proliferates, many regulators will need to update and possibly expand the work they’ve already done.

For investors, the regulatory uncertainty compounds AI’s other risks. To understand and assess how to deal with these risks, it helps to have an overview of the AI business, ethical and regulatory landscape.

Data Risks Can Damage Brands

AI involves an array of technologies directed toward performing tasks normally done by humans and performing them in a human-like way. AI and business can intersect through generative AI, which includes various forms of content generation, including video, voice, text and music; and large language models (LLMs), a subset of generative AI focused on natural language processing. LLMs serve as foundational models for various AI applications—such as chatbots, automated content creation, and analyzing and summarizing large volumes of information—that companies are increasingly using in their customer engagement.

As many companies have found, however, AI innovations may involve potentially brand-damaging risks. These can arise from biases inherent in the data on which LLMs are trained and have resulted, for example, in banks inadvertently discriminating against minorities in granting home-loan approvals, and in a US health insurance provider facing a class-action lawsuit alleging that its use of an AI algorithm caused extended-care claims for elderly patients to be wrongfully denied.

Bias and discrimination are just two of the risks that regulators target and that should be on investors’ radars; others include intellectual property rights and privacy considerations concerning data. Risk-mitigation measures—such as developer testing of the performance, accuracy and robustness of AI models, and providing companies with transparency and support in implementing AI solutions—should also be scrutinized.

Dive Deep to Understand AI Regulations

The AI regulatory environment is evolving in different ways and at different speeds across jurisdictions. The most recent developments include the European Union (EU)’s Artificial Intelligence Act, which is expected to come into force around mid-2024, and the UK government’s response to a consultation process triggered last year by the launch of the government’s AI regulation white paper.

Both efforts illustrate how AI regulatory approaches can differ. The UK is adopting a principles-based framework that existing regulators can apply to AI issues within their respective domains. In contrast, the EU act introduces a comprehensive legal framework with risk-graded compliance obligations for developers, companies, and importers and distributors of AI systems.

Investors, in our view, should do more than drill down into the specifics of each jurisdiction’s AI regulations. They should also familiarize themselves with how jurisdictions are managing AI issues using laws that predate and stand outside AI-specific regulations—for example, copyright law to address data infringements and employment legislation in cases where AI has an impact on labor markets.

Fundamental Analysis and Engagement Are Key

A good rule of thumb for investors trying to assess AI risk is that companies that proactively make full disclosures about their AI strategies and policies are likely to be well prepared for new regulations. More generally, fundamental analysis and issuer engagement—the basics of responsible investment—are crucial to this area of research.

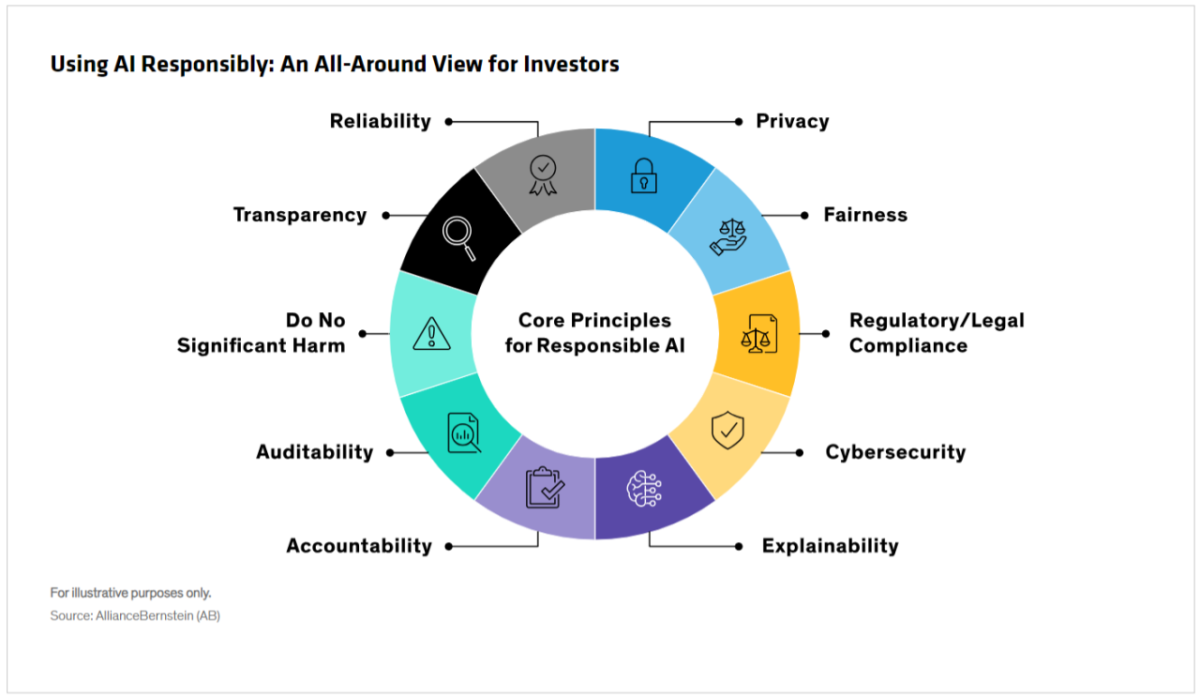

Fundamental analysis should delve not only into AI risk factors at the company level but also along the business chain and across the regulatory environment, testing insights against core responsible-AI principles (Display).

Engagement conversations can be structured to cover AI issues not only as they affect business operations, but from environmental, social and governance perspectives, too. Questions for investors to ask boards and management include the following:

- AI integration: How has the company integrated AI into its overall business strategy? What are some specific examples of AI applications within the company?

- Board oversight and expertise: How does the board ensure it has sufficient expertise to effectively oversee the company’s AI strategy and implementation? Are there any specific training programs or initiatives in place?

- Public commitment to responsible AI: Has the company published a formal policy or framework on responsible AI? How does this policy align with industry standards, ethical AI considerations, and AI regulation?

- Proactive transparency: Does the company have any proactive transparency measures in place to withstand future regulatory implications?

- Risk management and accountability: What risk management processes does the company have in place to identify and mitigate AI-related risks? Is there delegated responsibility for overseeing these risks?

- Data challenges in LLMs: How does the company address privacy and copyright challenges associated with the input data used to train large language models? What measures are in place to ensure input data is compliant with privacy regulations and copyright laws, and how does the company handle restrictions or requirements related to input data?

- Bias and fairness challenges in generative AI systems: What steps does the company take to prevent and/or mitigate biased or unfair outcomes from its AI systems? How does the company ensure that the outputs of any generative AI systems it uses are fair and unbiased, and do not perpetuate discrimination or harm to any individual or group?

- Incident tracking and reporting: How does the company track and report on incidents related to its development or use of AI, and what mechanisms are in place for addressing and learning from these incidents?

- Metrics and reporting: What metrics does the company use to measure the performance and impact of its AI systems, and how are these metrics reported to external stakeholders? How does the company maintain due diligence in monitoring the regulatory compliance of its AI applications?

Ultimately, the best way for investors to find their way through the maze is to stay grounded and skeptical. AI is a complex and fast-moving technology. Investors should insist on clear answers and not be unduly impressed by elaborate or complicated explanations.

The authors would like to thank Roxanne Low, ESG Analyst with AB’s Responsible Investing team, for her research contributions.

The views expressed herein do not constitute research, investment advice or trade recommendations and do not necessarily represent the views of all AB portfolio-management teams. Views are subject to revision over time.

Learn more about AB’s approach to responsibility here.

AllianceBernstein

AllianceBernstein (AB) is a leading global investment management firm that offers diversified investment services to institutional investors, individuals, and private wealth clients in major world markets.

To be effective stewards of our clients’ assets, we strive to invest responsibly—assessing, engaging on and integrating material issues, including environmental, social and governance (ESG) considerations into most of our actively managed strategies (approximately 79% of AB’s actively managed assets under management as of December 31, 2024).

Our purpose—to pursue insight that unlocks opportunity—describes the ethos of our firm. Because we are an active investment manager, differentiated insights drive our ability to design innovative investment solutions and help our clients achieve their investment goals. We became a signatory to the Principles for Responsible Investment (PRI) in 2011. This began our journey to formalize our approach to identifying responsible ways to unlock opportunities for our clients through integrating material ESG factors throughout most of our actively managed equity and fixed-income client accounts, funds and strategies. Material ESG factors are important elements in forming insights and in presenting potential risks and opportunities that can affect the performance of the companies and issuers that we invest in and the portfolios that we build. AB also engages issuers when it believes the engagement is in the best financial interest of its clients.

Our values illustrate the behaviors and actions that create our strong culture and enable us to meet our clients' needs. Each value inspires us to be better:

- Invest in One Another: At AB, there’s no “one size fits all” and no mold to break. We celebrate idiosyncrasy and make sure everyone’s voice is heard. We seek and include talented people with diverse skills, abilities and backgrounds, who expand our thinking. A mosaic of perspectives makes us stronger, helping us to nurture enduring relationships and build actionable solutions.

- Strive for Distinctive Knowledge: Intellectual curiosity is in our DNA. We embrace challenging problems and ask tough questions. We don’t settle for easy answers when we seek to understand the world around us—and that’s what makes us better investors and partners to our colleagues and clients. We are independent thinkers who go where the research and data take us. And knowing more isn’t the end of the journey, it’s the start of a deeper conversation.

- Speak with Courage and Conviction: Collegial debate yields conviction, so we challenge one another to think differently. Working together enables us to see all sides of an issue. We stand firmly behind our ideas, and we recognize that the world is dynamic. To keep pace with an ever changing world and industry, we constantly reassess our views and share them with intellectual honesty. Above all, we strive to seek and speak truth to our colleagues, clients and others as a trusted voice of reason.

- Act with Integrity—Always: Although our firm is comprised of multiple businesses, disciplines and individuals, we’re united by our commitment to be strong stewards for our people and our clients. Our fiduciary duty and an ethical mind-set are fundamental to the decisions we make.

As of December 31, 2024, AB had $792B in assets under management, $555B of which were ESG-integrated. Additional information about AB may be found on our website, www.alliancebernstein.com.
